The Modular Computing Opportunity

Where Blades, Grids, Networks,

System Software and Storage Converge

By John Harker

Principal Consultant, Technology Marketing

ZNA Communications

© 2003, John Harker and ZNA Communications. All Rights Reserved

What is Modular Computing?

A new computer architecture for enterprise servers has emerged centered around modular systems. It is being driven by the evolution in the data center to racks of a variety of computers and storage units instead of one or more proprietary Unix systems or mainframes. This new "convergence" market is being driven by rapid advances in high-speed networking, Internet and Web services interfaces, blade servers and grid computing. Soon all but the simplest servers will follow this modular systems model and a standard "server" will include multiple computers with shared storage. This evolution of modular computing systems is forcing computer professionals to rethink our model of the standard computer.

A computer architecture incorporates the nature and elements of the system and the way they are assembled. Traditionally a computer system has included a CPU, memory, I/O devices, and storage. It was assumed that an operating system allowed programs to run in that environment. It was also assumed that there was a great deal of overhead in accessing other systems or storage available via a network. This helped drive a ‘single system centric’ model.

Now, the evolving data center is using multiple systems made up of a set of standard building blocks – network edge devices, load balancing devices, application servers and high-performance hardened database systems. These are deployed in three-tier configurations, with front ends of network edge and load balancing devices, a second tier of banks of application servers in parallel, and a third tier of back-end high-performance database servers. Much of the work being done consists of network transactions occurring across multiple systems.
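The three-tier arrangement described above can be illustrated with a small sketch. It assumes a simple round-robin policy for the front tier, which is only one of several ways a load balancing device might distribute work. The class and function names are hypothetical, and a dictionary stands in for the back-end database.

```python
from itertools import cycle


class LoadBalancer:
    """Front-tier device that spreads requests across application servers."""

    def __init__(self, app_servers):
        self._servers = cycle(app_servers)  # endless round-robin iterator

    def route(self, request):
        server = next(self._servers)
        return server(request)


def make_app_server(name, database):
    """Build a second-tier handler that reads from the shared third tier."""
    def handle(request):
        return f"{name}: {database.get(request, 'not found')}"
    return handle


# Third tier: a single back-end data store (a dict stands in for a database).
database = {"order-17": "shipped"}

# Second tier: identical application servers running in parallel.
balancer = LoadBalancer([make_app_server(f"app{i}", database) for i in range(3)])

# Four requests arrive at the front tier; the fourth wraps around to app0.
replies = [balancer.route("order-17") for _ in range(4)]
```

Because every application server reads from the same back-end store, any of them can answer any request, which is what lets the middle tier be scaled by simply adding servers.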

High-speed networking, once the domain of custom switches and Fibre Channel, is rapidly advancing and becoming a commodity priced item. Gigabit Ethernet is now common and 10 Gigabit is becoming available. At those speeds and with the economies of volume manufacturing, interconnecting discrete computers at bus-level speeds will become practical and affordable.

Storage Area Networks (SANs) are changing the way storage is viewed as storage systems move from being a system attached resource to a network attached resource. This removes the performance penalty formerly associated with remote storage. SANs are being driven by their optimal use of disk capacity, along with the easier management and control of stored data.

There are three evolving areas of distributed systems software that enable modular computing: Web services, grid/clustered computing and distributed provisioning and management services. They are complementary in that they can be used together to solve the whole puzzle.

Web services decouple an application from any single operating system environment. Applications written to a Web services model can transparently access any other portion of the application via TCP/IP -- no matter what computer or operating system it is running on.

Grids, clusters and virtual operating systems enable processor power to be scaled to the application on demand. They also facilitate building fault-tolerant applications for improved reliability. After years as a proprietary high-end offering from OEMs such as IBM, DEC, Tandem, HP, and Siemens, clustering is becoming standards-based and open-systems oriented. Grid computing offers the same advantages as clustering but over multiple operating systems and hardware platforms without the need for tightly integrated system software. Both are complementary with Web services and are important elements of solutions that scale applications and make them more reliable.

Distributed provisioning and management software allows software installation and ongoing control of multiple servers and/or the individual blades in a blade server. Interestingly enough, blade servers are driving systems software vendors to improve their offerings since management and provisioning are fundamental requirements for blade server customers.

All of these elements are converging to define the new modular computing architecture in which the standard system environment consists of multiple computers of a variety of types with high-performance, networked access to a unified data store. In this converged environment, applications run over Internet, Web services, and grid computing interfaces that together make up a new ‘operating system’ that provides a uniform view of, and access to, all of the systems in the server. Products implementing these elements will help each other become established in the market and will greatly reduce the cost of ownership for customers of high-performance servers.

Blade Servers

Blades are computers on a card, designed to be plugged into a common bus in a rack-mounted chassis. Blades originally evolved in special-purpose industrial systems such as telecommunications switches. They have moved into general-purpose business systems, driven by the desire to save expensive space in data centers and colocation sites: they allow for a greater density of computers per unit of space, use less power and allow for much simpler cabling. They also show promise in simplifying systems management and allowing flexible resource allocation (application and operating system provisioning) of systems.

Diagram 1: A blade system

Blade servers disaggregate many of the components usually found in rack-mounted computer systems. Individual blades have processor, memory, a disk drive, networking and I/O connectivity. They do not have individual power supplies, fans, CD-ROMs, floppy drives, keyboard and mouse ports – those are provided by the chassis and shared. Also, in a blade environment most storage is handled by separate storage devices, not the drive on the blade.

A current problem for blade servers is that most applications can run on (take advantage of) only one computer. Unless multiple copies of the application can be run in parallel, they can make only limited use of the blade servers’ capabilities. Also, due to space and heat dissipation constraints, individual blades are typically not that powerful. Because of this, blade servers are limited largely to tier 1 and some tier 2 type applications such as load-balancing relay servers or Web front-end servers. In the future, this limitation will be removed as two things happen - the evolution of the new software operating environment that allows grouping of blades together into a single system, and the development of high-speed interconnections between the blades to remove the performance penalty of cross-computer processing. As this evolution occurs, new opportunities will open up on this new class of low-cost, high-power, highly reliable servers.

Storage Area Networks

In the last decade as data centers added rack-mounted standard servers to their proprietary Unix and mainframe environments, high-bandwidth Storage Area Networks (SANs) have evolved as the best way to make blocks of data available to multiple systems. They have facilitated the consolidation of data centers and the move to server-centric computing. They have opened the way to new capabilities such as storage virtualization, which allows better management and more efficient use of storage resources. They have enabled better high-availability capabilities and backup and disaster recovery technologies and processes.

Diagram 2: A SAN

The emergence of blade servers with their limited space for onboard disk drives will accelerate this trend to move mass storage off the server and onto a network device of its own. In the new data center, storage will no longer be subordinate to a master server. Servers and storage devices will become peers, and all servers will have the capability of equal high-speed access to stored data.

High-Speed Networks

Traditionally networking technologies and computer bus-oriented system I/O technologies have been seen as different things, with different requirements, and have been implemented with different technologies. But this is changing. The interconnection requirements and technologies for networking, storage connections, and computer backplane connections are rapidly converging.

With LANs, Ethernet has emerged as the dominant standard. Large volumes have enabled it to become a low-cost commodity and to fund research investment that has steadily increased its speed. Currently Gigabit Ethernet devices are common and new 10 Gigabit Ethernet products are being introduced. The drop in costs and increase in speeds will continue.

As open standard storage systems have become common, 2 Gigabit Fibre Channel has been the most common interconnection mechanism. Inside the SAN switches, proprietary higher-speed, high-cost technologies are used in the 60-100 Gigabit range. Things are changing though – recently standards have been finalized for using the SCSI disk interface protocols over TCP/IP Ethernet connections (iSCSI). Equivalent or faster Ethernet will cost much less than Fibre Channel. So in conjunction with high-speed Ethernet technologies, iSCSI is expected to be rapidly adopted and used by SAN vendors and customers.

The most common bus I/O standard today is PCI. Device-to-device throughput with PCI can be up to roughly 500 MB/s (the peak of a 64-bit, 66 MHz bus). An improved standard, PCI-X, is being developed that among other things will allow this to be sped up to about 10 Gbps. Originally it was envisioned that a new consortium-driven standard, InfiniBand, would be the technology used to implement PCI-X. But given the high speeds and low costs of Ethernet, there is now speculation that Ethernet used in a switch-based topology may be a popular alternative. In either case it is clear that interconnecting discrete computers at bus-level speeds will soon become practical and economical.

The sum of all this is that it is rapidly becoming feasible to use the same connection technologies for networks, storage systems, and processor I/O devices to simplify the new modular computing environment both physically and also from an administrative standpoint. Furthermore, standardized, high-volume manufacturing will reduce the price.
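A little arithmetic shows why these speed increases matter. The sketch below compares idealized transfer times at the nominal line rates named above. It assumes no protocol or encoding overhead, so real-world figures would be somewhat worse, and the 10 GB payload is an arbitrary illustration.

```python
# Nominal line rates in gigabits per second, as named in the text.
LINK_GBPS = {
    "Gigabit Ethernet": 1,
    "2 Gigabit Fibre Channel": 2,
    "10 Gigabit Ethernet": 10,
}


def transfer_seconds(gigabytes, gbps):
    """Idealized time to move `gigabytes` over a `gbps` link (no overhead)."""
    gigabits = gigabytes * 8  # 8 bits per byte
    return gigabits / gbps


for name, rate in LINK_GBPS.items():
    print(f"{name}: {transfer_seconds(10, rate):.0f} s to move 10 GB")
```

Under these assumptions, a tenfold increase in line rate cuts the transfer time by the same factor, which is what brings network-attached resources closer to locally attached ones.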

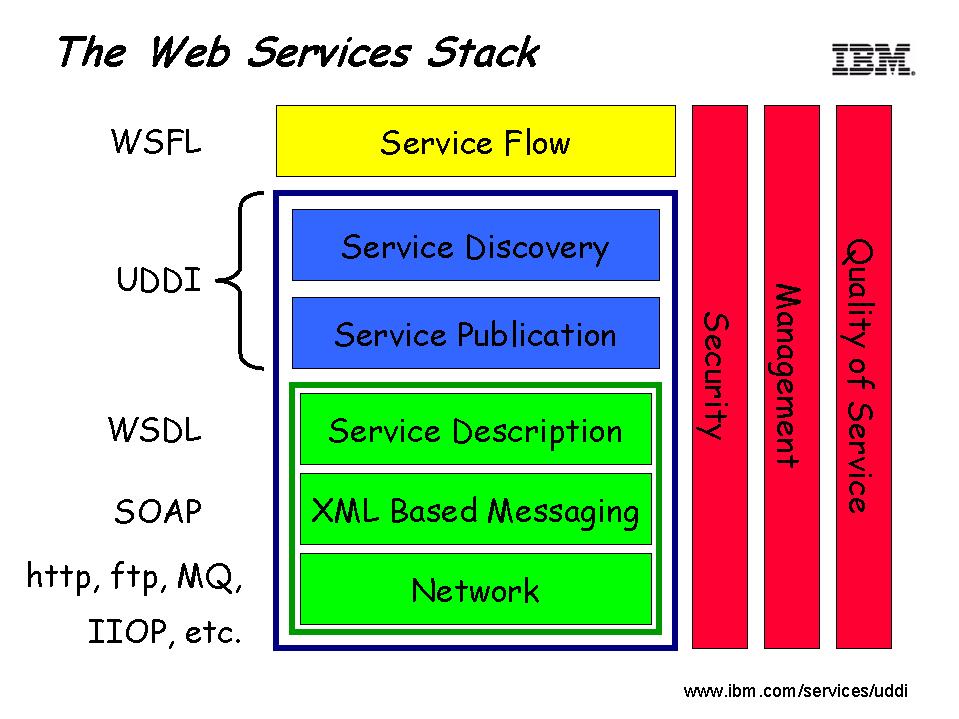

Web Services

Web services are a new and evolving paradigm for building distributed applications. A Web service is a service that is available over TCP/IP connections, uses a standard XML messaging system, and is not tied to any one operating system or programming language. Web service applications use standards such as UDDI, WSDL and SOAP to be self-describing and discoverable, so other applications can find and make use of them at run time. Writing applications to a Web services interface enables them to make use of multiple computers in a manner that is transparent to the application.
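To make the XML messaging idea concrete, here is a minimal sketch of a SOAP-style request being built by one program and read back by another. It uses the SOAP 1.1 envelope namespace, but the application namespace, the operation name `CheckStock`, and its parameters are hypothetical. A real service would also publish a WSDL description and send the message over HTTP, both of which are omitted here.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
APP_NS = "urn:example:inventory"  # hypothetical application namespace


def build_request(operation, params):
    """Wrap an operation call in a SOAP envelope and return it as XML text."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    call = ET.SubElement(body, f"{{{APP_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{APP_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="unicode")


def parse_request(xml_text):
    """Recover the operation name and parameters on the receiving side."""
    body = ET.fromstring(xml_text).find(f"{{{SOAP_NS}}}Body")
    call = body[0]  # the single operation element inside the Body

    def local_name(tag):
        return tag.split("}", 1)[1]  # drop the "{namespace}" prefix

    return local_name(call.tag), {local_name(c.tag): c.text for c in call}


message = build_request("CheckStock", {"sku": "A-100", "quantity": 3})
operation, params = parse_request(message)
```

Since the message is plain XML text, the sender and receiver need not share an operating system or programming language. Note that values arrive as strings, so the receiver is responsible for converting them to the types it expects.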

Web services are still in their infancy, but they are poised to revolutionize distributed application development. The standards for Web services are being driven by the World Wide Web Consortium's (W3C) XML Protocol group, which will help ensure multi-vendor interoperability.

Grid Computing and Clustering

Both grid computing and clustering provide leveraged computing power for applications by allowing them simultaneous use of multiple computers. They aggregate distributed computing resources to act as a unified processing resource within or across locations. A cluster is a distributed system environment over a set of closely coupled computers. A grid is a distributed application environment over a range of heterogeneous computers that retain individual system administration systems and practices. A grid achieves much the same things as a cluster without the need for tight integration at the system and operating system level. Grids can be built using mixed operating systems and hardware platforms and can utilize existing resources, resulting in faster, more efficient processing of compute-intensive jobs.

The unified environment of a grid or cluster coordinates usage policies, scheduling/queuing characteristics, fault recovery and security. It eliminates the need to bind specific machines to specific jobs. And it can eliminate the need to unnecessarily move or replicate data. Much as with the Internet, initial grid usage has primarily been by collaborative research communities who have a need to scale applications. Through the recent support of companies such as IBM and Oracle to make cluster- and grid-based Web services and databases available, future grids will run enterprise applications taking advantage of high-availability and load-balancing capabilities.
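The scheduling and fault recovery behavior described above can be sketched in a few lines. This is a toy model under stated assumptions, not how any particular grid product works: jobs wait in a single queue, any healthy node may take the next job, and a node found to be down is dropped while its job is returned to the queue. All names are hypothetical.

```python
from collections import deque


def run_on_grid(jobs, nodes, down=frozenset()):
    """Run queued jobs on whichever node is next; requeue work from dead nodes.

    jobs: iterable of job names; nodes: list of node names;
    down: nodes that turn out to be unavailable when first tried.
    Returns a dict mapping each job to the node that completed it.
    """
    queue = deque(jobs)
    healthy = deque(nodes)
    completed = {}
    while queue:
        if not healthy:
            raise RuntimeError("no healthy nodes left")
        job = queue.popleft()
        node = healthy.popleft()
        if node in down:
            # Fault recovery: drop the node and put the job back in the queue.
            queue.appendleft(job)
            continue
        completed[job] = node
        healthy.append(node)  # the node is free again for a later job
    return completed


result = run_on_grid(["j1", "j2", "j3"], ["n1", "n2", "n3"], down={"n2"})
```

No job is bound to a specific machine: when node n2 proves unavailable, job j2 simply runs on the next healthy node instead.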

It is useful to categorize grids and clusters by function. These functions are not mutually exclusive, and existing products often offer support for more than one, but products generally excel at a particular category. The three major types are:

Grids seem to be an area where open source technologies are edging out proprietary ones. Many vendors are currently selling mixed proprietary/open standards solutions, for example commercialized open source, or proprietary management but with open system foundations. There is an active open source movement as well.

On the horizon is a new set of standards called the Open Grid Services Architecture (OGSA) that will make it easier for companies to roll out grid applications that work across heterogeneous networks and the Web. The Global Grid Forum, whose hundreds of members include Sun and IBM, is developing OGSA. The group is currently finalizing the OGSA documents and they should be out in the very near future. The Globus Toolkit project, sponsored by the Global Grid Forum, is an open source implementation of OGSA.

Current Grids/Clusters Target Markets:

It is expected that in the future many common business applications and databases will run on grids, adding many more target markets.

Provisioning and Management

In the data center or hosting center, manageability has long been a key issue for customers and a key differentiator for server vendors. Technologies have evolved to allow for remote installation, management and monitoring of servers in these situations. To date, many of these are proprietary and vendor specific, but this is changing.

The emergence of blade servers has made this even more of an issue. Blades don’t have individual consoles or installation devices, so they require remote provisioning and installation capabilities. And because blades are usually hot-swappable, administrators must be able to quickly discover new blades, identify the proper configuration and allocate the necessary images to the blades.
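The discover, identify and allocate sequence described above might look like the following sketch. It assumes the chassis can report which slots are occupied and that an administrator has assigned a role to each slot in advance. The slot numbers, role names and image file names are all hypothetical.

```python
# Hypothetical mapping from a blade's intended role to a boot image name.
IMAGE_FOR_ROLE = {"web": "web-frontend.img", "app": "app-server.img"}

# Hypothetical chassis policy: which role each slot should play.
ROLE_FOR_SLOT = {1: "web", 2: "web", 3: "app"}


def discover_new_blades(present_slots, provisioned):
    """Return slots that hold a blade but have not been provisioned yet."""
    return sorted(set(present_slots) - set(provisioned))


def provision(present_slots, provisioned):
    """Assign an image to each newly discovered blade; update `provisioned`."""
    for slot in discover_new_blades(present_slots, provisioned):
        role = ROLE_FOR_SLOT.get(slot)
        if role is None:
            continue  # no policy for this slot, leave it for an administrator
        provisioned[slot] = IMAGE_FOR_ROLE[role]
    return provisioned


state = {1: "web-frontend.img"}      # slot 1 was provisioned earlier
state = provision([1, 3, 4], state)  # blades hot-plugged into slots 3 and 4
```

In this sketch the blade in slot 3 receives the application server image automatically, while the blade in slot 4 is left alone because no role has been defined for that slot.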

Management products for blades fall into one of four categories: change and configuration management; image cloning and management; provisioning; and policy-based management. Policy-based management, in which systems can automatically deploy predefined actions (such as for configuration, security, provisioning and performance) across servers and other network elements, remains the ultimate goal for vendors and users.

Existing standards such as SNMP and Intel’s Intelligent Platform Management Interface can be used in solving these problems, but much work remains to be done. To date most of the blade vendors are providing proprietary provisioning and management software with their blade systems, but there is an active emerging market in independent products with heterogeneous system support.

What Does Modular Computing Mean To You?

To many vendors in the computer industry, the emergence of the new modular computing model offers the opportunity -- or sometimes an imperative need! -- to drive more business. As it stands today, major players such as IBM, HP, Sun and Dell are poised to dominate this market. Other vendors will have to move expertly and quickly to carve a space in this new area. Technology suppliers that can benefit from the modular computing opportunity include:

Grid OEMs sell grid or cluster systems software. Their need is to reach new markets, which in the emerging world of modular computing means they want their products to be known to and respected by OEMs offering blade servers and by the customers who are buying them. Grid OEMs will also need to partner with segment-appropriate ISVs, IHVs and VSPs who can provide the applications that take advantage of grid or clustering capabilities.

Independent software vendors (ISVs) and vertical solution providers (VSPs) sell horizontal and vertical (with respect to target markets) application and system software. For existing ISVs and VSPs, the opportunities are to develop an entry into the Web services market and to offer a grid and/or clustering option for their current product(s) to maintain and grow their market share. There are also large opportunities for systems management ISVs to develop completely remote server management and provisioning tools. ISVs and VSPs are often coupled to their tool/platform vendors, so in many cases they will need to learn about and acquire modular computing tools and development platforms before they can take advantage of this market. Tool vendors' customers need development tools optimized to scale processing and improve application fault tolerance to take advantage of modular server environments.

Blade server vendors sell blade computers or other parts that fit into a blade computer (such as a switch or blade management system). They need support for more types of applications on blade servers. To a large extent, blade servers currently are only used for tier 1 processing (e.g. Web load balancing). New O/S virtualization, grid and cluster environments offer paths to greater blade utilization by tier 2 and tier 3 applications. Blade vendors need to show their customers how to use grids and clusters to take full advantage of blade servers and educate their customers regarding the various options and recommended operating environments, vendors and products.

Modular computing offers opportunities to storage vendors for SAN and NAS products. They need to ensure their products are compatible and support the advanced requirements of blade servers with system image IPL, fault tolerance and unified management. They also need to make sure their products are listed and recommended in modular computing forums.

For all these vendors and their customers alike, the emergence of modular computing is changing the face of information technology and opening new opportunities for those who recognize the impact of disruptive technologies. As always, such shifts will change the face of the market, with many existing companies going the way of the buggy whip and others breaking into new territory and ongoing success.